A clarification on why novelty-driven execution introduces fragility.

Technological change is constant.

What varies is how organisations respond to it.

Execution failures rarely occur because a technology is new.

They occur when execution is reshaped around perceived shifts rather than around governed structure.

Across recent years, the same failure patterns repeat — regardless of whether the technology is labelled AI, decentralisation, or edge computing.

The issue is not adoption.

It is misplaced authority.

1. When Automation Is Introduced Without Execution Boundaries

Automation does not fail because it is immature.

It fails when processes are automated before they are stable, observable, and accountable.

Execution becomes fragile when:

- optimisation precedes understanding

- systems learn faster than teams can intervene

- responsibility becomes opaque

Automation must follow structure — never replace it.

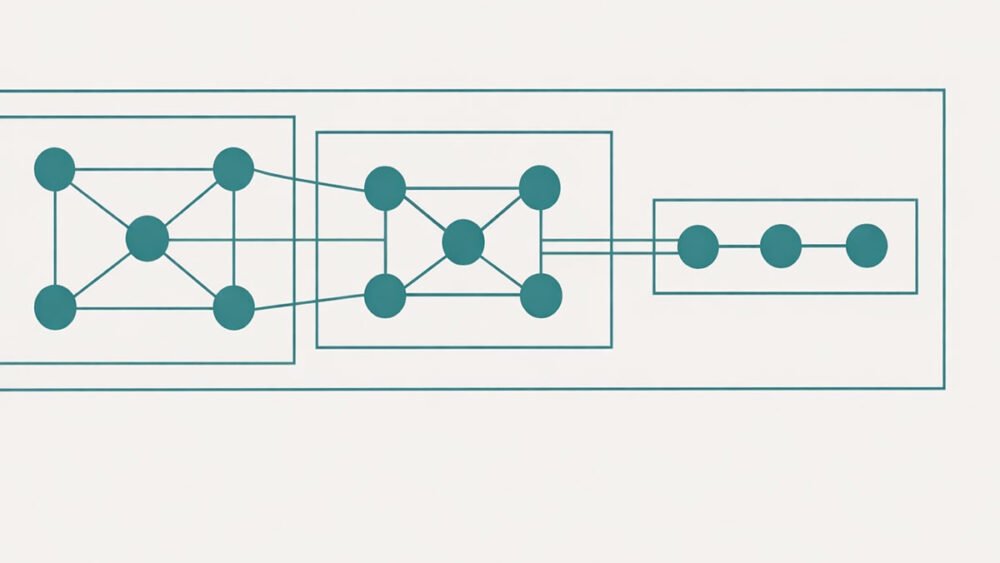

2. When Ownership Models Change Faster Than Governance

Shifts in data ownership, identity, or control introduce risk when governance does not move at the same pace.

Execution degrades when:

- authority is distributed without accountability

- trust is delegated to systems without enforcement

- operational responsibility becomes ambiguous

Decentralisation without governance increases the failure surface, not resilience.

3. When Infrastructure Is Rebuilt Around Novelty, Not Load

Changes in infrastructure location — centralised or distributed — do not determine reliability.

Execution fails when:

- systems are designed for possibility rather than pressure

- performance assumptions are untested

- proximity replaces observability

Infrastructure decisions must be driven by failure analysis, not architectural fashion.

Execution remains reliable only when:

- decisions are validated before implementation

- structure precedes automation

- governance precedes scale

Technology does not reshape execution.

Execution discipline determines whether technology holds.

Reliability is not achieved by anticipating change, but by constraining it.